Location in the Visual World

VPS and other AR developer tools from a geospatial viewpoint

Can't say what I'm doing here

But I hope to see much clearer

After living in the material world— George Harrison

Landmarks and navigation

In a basic map reading course, you may come across several methods of navigation. All of these involve you having a paper map, with a goal of using it to find your way from Point A to Point B, perhaps through a rural landscape. It is not as obvious as navigating a city, where street names and simple grid or radial geometry make directions as easy as “follow 33rd Street for 5 blocks then take a left on Harrison Avenue”.

Instead, you find yourself plotting a distance along a road, or perhaps through a forest. You may be counting your paces, knowing 52 steps is 100 meters for you. You may then be counting 156 steps along a bumpy dirt road before turning 90 degrees (magnetic—referring to your compass) into the forest for another 150 meters where you should find a helicopter landing site. For military training, this is a very standard exercise.
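
The pace arithmetic above can be written down as a small sketch. The figure of 52 steps per 100 meters is the one from the example; the function names are my own, chosen only for illustration.

```python
# Pace count from the example above: 52 steps cover 100 meters.
STEPS_PER_100_M = 52


def steps_to_meters(steps, steps_per_100_m=STEPS_PER_100_M):
    """Convert a counted number of steps into a distance in meters."""
    return steps * 100 / steps_per_100_m


def meters_to_steps(meters, steps_per_100_m=STEPS_PER_100_M):
    """Convert a planned distance in meters into a whole number of steps."""
    return round(meters * steps_per_100_m / 100)


road_leg_m = steps_to_meters(156)        # the dirt road leg, in meters
forest_leg_steps = meters_to_steps(150)  # the forest leg, in steps
print(road_leg_m, forest_leg_steps)      # 300.0 78
```

So the 156 steps on the road come to 300 meters, and the 150 meters through the forest should take about 78 steps.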

Land Navigation, as this subject is called, consists of moving over landscapes with no technological aids, and facing challenges such as moving at night, avoiding roads, and using mental tricks to make complex navigation easier. A very common tactic is to use landmarks and terrain features as waypoints. You can also “dead reckon”, just going in a fixed direction and distance, or “handrail”, following a river or road as a guide to get near a destination.
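
Dead reckoning is simple enough to sketch in a few lines. This is a minimal illustration on a flat local grid, assuming compass bearings measured clockwise from north and ignoring magnetic declination; the function name and the sample route are hypothetical.

```python
import math


def dead_reckon(start, legs):
    """Return the (east, north) position in meters after walking each leg.

    start is an (east, north) tuple in meters.
    legs is a list of (bearing_degrees, distance_meters) tuples,
    with bearings measured clockwise from north.
    """
    east, north = start
    for bearing_deg, distance_m in legs:
        theta = math.radians(bearing_deg)
        east += distance_m * math.sin(theta)   # eastward component of the leg
        north += distance_m * math.cos(theta)  # northward component of the leg
    return east, north


# Hypothetical route: 300 m along a road heading north, then 150 m on a bearing of 90 degrees.
east, north = dead_reckon((0.0, 0.0), [(0, 300), (90, 150)])
print(round(east, 1), round(north, 1))  # 150.0 300.0
```

Every leg adds a little error, which is exactly why a landmark that lets you reset your position is so valuable.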

Landmarks and environmental context are powerful assets when navigating. When you come across a water tower, a particularly prominent rock, a stream crossing, a teardrop-shaped pond, or a specific S-shaped curve in a road, you can use any of these to recalibrate your position. Even if the map is lost, committing these to memory can get you to your destination and tell you how far along you have come.

You have likely done something similar: you walk until you see a fountain, turn left, then at the bridge you will see a tall yellow building with balconies and flowers. This is your hotel. You may have never seen it before, but perhaps you received a written guide by email from your hosts, or gazed at a photo on TripAdvisor, to make arriving easy.

All of this is anchored in the visual positioning system inside your brain.

Maps are not always maps

If you ever code in Python, you may be familiar with the term map. A map function will take some function, like multiplying the input by 5, and then apply it to a list of items. For example, if you have a list that includes 2, 5, and 7, you can map this function to get back a list with 10, 25, and 35.
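
In code, that example looks like this, using Python's built-in map (the helper name times_five is just for illustration):

```python
def times_five(x):
    """Multiply the input by 5."""
    return x * 5


# map applies the function to every item; list() collects the results.
result = list(map(times_five, [2, 5, 7]))
print(result)  # [10, 25, 35]
```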

As someone who often uses Python for geospatial problem solving, I was mildly annoyed early on that the map function did not refer to something geospatial. However, it reminds me that the word map exists independently of these visual diagrams of planet Earth. You can map the brain, you can map the relationships between economic variables, you can map anything without it being a geographic matter.

However, you can also map the world, or a location, without what we think of as a map. Mapping is not so much cartography as it is matching one set of things to another, like matching your physical location to a place in a paper atlas.

The Songlines

A fascinating example of this non-visual, non-Cartesian coordinate type of mapping comes from Australia. Many years ago I read Bruce Chatwin’s travel writing classic, The Songlines, which gave a great outsider’s view of how some indigenous people have a millennia-old method of mapping the landscape.

To quote one source on the definition of these songlines or “dreaming tracks”:

One of the most famous of these is that the songlines served as a means for navigating the land. It has been asserted that by singing certain traditional songs in a specific sequence, people were capable of navigating the land, often travelling across vast distances across all types of terrain and weather, without getting lost. This is due to the fact that numerous features in the landscape are contained in the songlines, thus allowing them to be used as oral maps.

These descriptions of journeys can be very similar to some of the directions we explored above. Consider it in the context of other cultures as well: you could describe a journey along the Danube in Europe by naming cities, fortresses, and bridges along the way, such as Vienna, Budapest, the Chain Bridge, the river delta on the Black Sea, and so on. You could describe various geysers, canyons, and lodges on the highway in Yellowstone National Park, or the shapes of mountains in the Karakoram range as one treks along the rugged path.

Recognition from experience

If you suddenly wake up, and find yourself teleported to a city with an imposing wrought iron tower on the horizon, you may instantly recognize it as the Eiffel Tower. You can quickly draw a conclusion: I am in Paris! Unless of course, the scene also includes palm trees and a high rise hotel, in which case you are probably in Las Vegas.

If you have visited either of these locations in the past, then you are informed by your own experience. However, maybe you have never traveled to either of these places, and instead you’ve seen them on flashcards with photos of world cities. Or maybe you’ve scrolled Instagram enough to become familiar with the scene.

Whatever your source material, some past experience allows you to classify the scene and therefore guess your location with strong confidence, perhaps even certainty. If you have an even deeper level of familiarity, because you have visited the location, spent time exploring a 2D map or a 3D model, played a video game that takes place there, or watched a few documentaries focused on the place, then you may be able to guess very accurately where you are standing. And given any photograph of the place, you could also guess where the photographer was standing, to within a radius of about a meter.

It all depends on your level of familiarity. Imagine even further that this is your backyard, or your favorite plaza or park, the inside of your kitchen, anywhere that is truly and intimately familiar to you. You’d likely immediately get a mental image of the observer location given any photograph of the place.

Awaiting GPS Signal

Let’s dive right into the point of this discussion: you can know where you are, with great precision, without relying on GPS. And so can your mobile phone.

In 2018, Google showcased their Visual Positioning System (VPS) at Google I/O. It was built into Google Maps as Live View, which by default indicated that this was a geospatial and navigation-related tool. It is also part of Google’s cutting-edge ARCore developer tools, in the ARCore Geospatial API, which allows placing anchors (an emoji, a note, a golden coin) within a 3D scene.

Google writes in the linked documentation about the relationship of VPS and GPS:

Using GPS and other sensor data alone to determine location is not usually sufficient to achieve the precision required for AR applications. The Geospatial API uses device sensor data (such as GPS), and captured image data to determine precise location. It creates an image map that is processed to find the recognizable parts of the environment and matches them to the VPS localization model. The result is a very precise location and pose (position and orientation) for your app.

Google also describes present and future plans:

With the Geospatial API, you can place an anchor almost anywhere in the world at a given latitude, longitude and altitude. 3D building geometry is a still developing part of the VPS localization model, and placing anchors on geometric planes will be supported, too, as enhancements to these and other aspects of the VPS localization model are planned for future releases of the Geospatial API.
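
To get a feel for what an anchor at a given latitude, longitude and altitude means relative to a device, here is a rough sketch. It is not the ARCore Geospatial API: the function name is hypothetical, and it assumes a spherical Earth and a flat local approximation that is only reasonable over short distances.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius; a spherical Earth is assumed


def anchor_offset_m(device, anchor):
    """Approximate (east, north, up) offset in meters from device to anchor.

    device and anchor are (latitude_deg, longitude_deg, altitude_m) tuples.
    """
    lat0, lon0, alt0 = device
    lat1, lon1, alt1 = anchor
    north = math.radians(lat1 - lat0) * EARTH_RADIUS_M
    # Meridians converge toward the poles, so scale longitude by cos(latitude).
    east = math.radians(lon1 - lon0) * EARTH_RADIUS_M * math.cos(math.radians(lat0))
    up = alt1 - alt0
    return east, north, up


# Hypothetical device on the equator, with an anchor 0.001 degrees east and 5 m higher.
east, north, up = anchor_offset_m((0.0, 0.0, 0.0), (0.0, 0.001, 5.0))
print(round(east, 1), north, up)  # 111.2 0.0 5.0
```

The precise pose from VPS is what makes an offset like this usable: an error of a few meters in the device position moves the anchor by the same amount in the scene.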

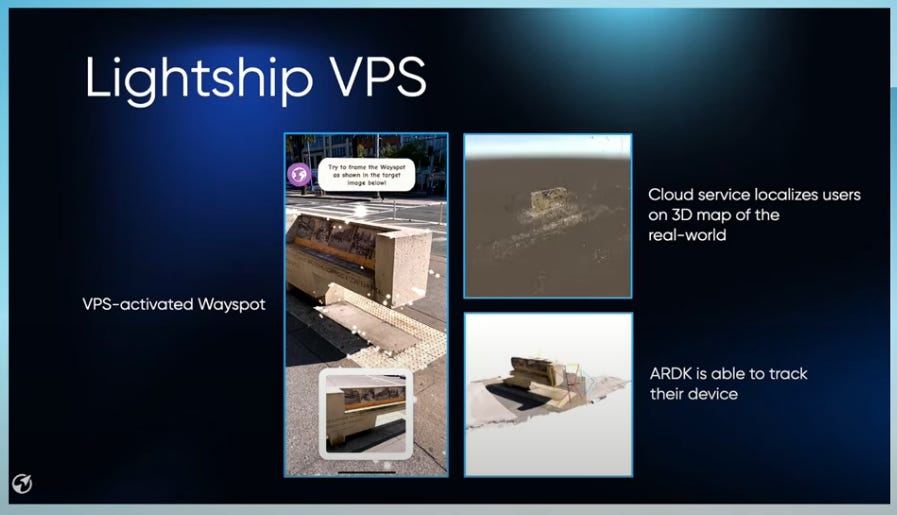

This technology is present in several products and ecosystems beyond Google Maps. Niantic’s Pokemon Go is a great example, and Niantic also announced its VPS service for developers at the Lightship Summit. Snapchat has similar work going on, and in many ways any technology, any open source library like OpenSfM, and any product that works with computer vision combined with spatial computing is on a similar track.

VPS and Augmented Reality

VPS is naturally associated with Augmented Reality (AR), because of the way it enables AR services. It serves as one of several bridges between legacy geospatial topics, such as maps, data, and location, and the world building that demands more than legacy systems typically offer. As companies like Niantic and Google announce further inroads into AR, their developer toolkits also grow.

Niantic’s Lightship VPS for developers is one of several tools described at the recent summit. It includes a coverage API, where the user sends a query location and a radius, and the API then searches for VPS coverage areas within that radius.
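
A radius query like this can be imitated locally with a great-circle distance filter. The sketch below is not Niantic's API: the function names, the field names, and the sample coverage areas are all hypothetical, and a spherical Earth is assumed.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius; a spherical Earth is assumed


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def find_coverage(areas, lat, lon, radius_m):
    """Return names of coverage areas within radius_m of the query point, nearest first."""
    hits = []
    for area in areas:
        distance = haversine_m(lat, lon, area["lat"], area["lon"])
        if distance <= radius_m:
            hits.append((distance, area["name"]))
    return [name for _, name in sorted(hits)]


# Made-up coverage areas, purely for illustration.
areas = [
    {"name": "fountain", "lat": 37.7750, "lon": -122.4195},
    {"name": "clock tower", "lat": 37.7780, "lon": -122.4193},
    {"name": "far pier", "lat": 37.8087, "lon": -122.4098},
]
nearby = find_coverage(areas, 37.7749, -122.4194, 500)
print(nearby)  # ['fountain', 'clock tower']
```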

Niantic claims only a single photo frame is needed to localize a device, and with a 4G connection it should happen within 1 second. This process only works in a limited set of places worldwide, generally called Wayspots.

A Wayspot is a publicly accessible real world location or landmark. Only some of these are actually VPS enabled, and the Wayspot API provides access to these.

To summarize, a general workflow seems to be:

Get user location (using GPS, apparently)

Query the coverage API for a list of Wayspots nearby

Request the scene data for these Wayspots in the Wayspot API

Localize with the Wayspot, getting exact user location

Begin using AR to view or create content
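
The steps above can be sketched as a single function that chains them together. Every callable here is a hypothetical stand-in that you would replace with real SDK calls; none of these names come from Niantic's documentation.

```python
def run_vps_session(get_gps_fix, query_coverage, request_scene, localize, start_ar, radius_m=500):
    """Chain the five workflow steps; returns the pose, or None if localization fails."""
    lat, lon = get_gps_fix()                       # 1. coarse location from GPS
    wayspots = query_coverage(lat, lon, radius_m)  # 2. Wayspots with VPS coverage nearby
    for wayspot in wayspots:
        scene = request_scene(wayspot)             # 3. scene data for this Wayspot
        pose = localize(scene)                     # 4. None when the camera view does not match
        if pose is not None:
            start_ar(pose)                         # 5. view or create AR content
            return pose
    return None  # no coverage nearby, or no Wayspot matched


# Usage with fake steps, just to show the control flow.
started = []
pose = run_vps_session(
    get_gps_fix=lambda: (37.7749, -122.4194),
    query_coverage=lambda lat, lon, radius: ["statue", "mural"],
    request_scene=lambda wayspot: {"wayspot": wayspot},
    localize=lambda scene: "pose@mural" if scene["wayspot"] == "mural" else None,
    start_ar=started.append,
)
print(pose)  # pose@mural
```

Note that GPS only narrows the search. The precise location comes from the localization step.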

Currently Niantic is calling this toolset experimental and encouraging use of the Niantic Wayfarer app to add more coverage.

Street View as a Foundation

Niantic’s Lightship VPS coverage was highlighted in a brief map during the Lightship Keynote, showing a few top cities where it is functional. It is easy to notice that even in these cities, it is actually not universal coverage, but certain locations—probably specific landmarks or scenes that users helped map:

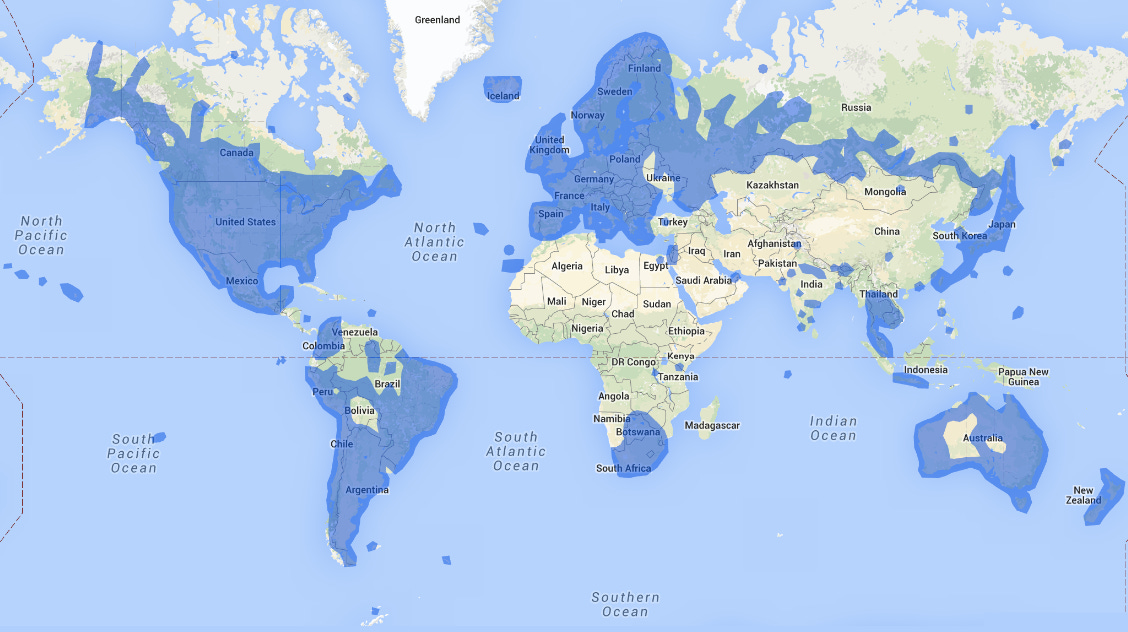

Meanwhile, the Google Developers blog describes the coverage extent of Google’s VPS available in the ARCore Geospatial API:

…users can be anywhere Street View is available, and just by pointing their camera, their device understands exactly where it is, which way it is pointed and where the AR content should appear, almost immediately.

In case you have not seen this map floating around, here is an estimation of the global street view coverage that Google has:

Zooming in matters, however—it’s not like literally every road in Colombia is covered for example. But pretty close. A Wikipedia page for Google Street View shows a different way of estimating coverage by country, as well as an agenda of planned new coverage by country and year. Colombia, Chile, Thailand, Sri Lanka, Japan, Italy, Mexico, USA, South Africa, and many others have “mostly full coverage”:

Overall, it appears there is a clear correlation between immersive videos, 360 degree imagery, or other visual data collection and the functionality of VPS. This makes sense: VPS needs a reference to match against, just as a GPS receiver needs signals from satellites. AR seems likely to be limited to places where this 3D world has been built, so street view coverage maps might be an indicator of where AR-ready devices will have a market.

Major questions about the future of VPS arise, such as whether crowdsourcing can provide needed coverage, whether lower quality images and videos can achieve the same results as professional Google Street View imagery, and how quickly VPS can start failing if available imagery is out of date.

Presumably, a constantly refreshing 3D world map is needed, based on various sources like street view imagery, videos, snapshots of landmarks, satellite imagery, aerial surveys, LiDAR scans, and whatever else might move the dial toward freshness and detail. There are several strategies in place to generate as much of this type of data as possible.

Google has expanded the ability of the community to add to official coverage, and users also upload various 360 and still images through Google Maps reviews. Apple is expanding Look Around, Mapillary continues to grow its crowdsourced imagery coverage, and Niantic is promoting its Wayfarer app, in which users make 3D scans of areas specifically to unlock VPS. Data collection is a truly cyclical process of mapping a world that never stops changing.

The Grand SLAM

A quick aside: what about Simultaneous Localization and Mapping (SLAM)? Is VPS simply another way of talking about SLAM? There is a subtle difference. VPS is the localization aspect of SLAM, but what simultaneously happens with SLAM is the map and world building.

With VPS, a reference model of the world is assumed to already exist, and the user device, such as a mobile phone, an autonomous golf cart, or AR glasses, compares real-time visual capture against the model to get a location. With SLAM, often a critical part of autonomous vehicle development, the visual sensors, along with others like LiDAR, are also making a new map of the static scene as well as a survey of particularly dynamic objects like other cars or people. It does not assume an existing reference model is accurate and up to date.

SLAM is also not explicitly visual, as it can be done entirely with LiDAR. There are likely many more details to get into, but generally it is good to think of VPS as a specifically visual, image-based system that relies on a reference, while SLAM is a more closed system attempting to both create the reference model and find a location within it at the same time.
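
A toy sketch can make the distinction concrete. It is heavily simplified: it works in 2D, assumes the device already knows which landmark is which and which way it is facing, and uses hypothetical names throughout. Real systems match image features and estimate a full pose.

```python
def localize(reference, observations):
    """VPS-style step: estimate the device (x, y) from landmarks already in the reference.

    observations maps a landmark id to its (dx, dy) offset as seen from the device.
    Returns None when nothing observed exists in the reference model.
    """
    estimates = [
        (reference[name][0] - dx, reference[name][1] - dy)
        for name, (dx, dy) in observations.items()
        if name in reference
    ]
    if not estimates:
        return None
    count = len(estimates)
    return (sum(x for x, _ in estimates) / count, sum(y for _, y in estimates) / count)


def slam_step(landmark_map, observations, pose_guess=(0.0, 0.0)):
    """SLAM-style step: localize, then add newly seen landmarks to the map."""
    pose = localize(landmark_map, observations)
    if pose is None:
        pose = pose_guess  # nothing recognized yet, fall back to the guess
    for name, (dx, dy) in observations.items():
        if name not in landmark_map:
            landmark_map[name] = (pose[0] + dx, pose[1] + dy)
    return pose


# Made-up reference model and observations, in meters on a local grid.
reference = {"fountain": (10.0, 0.0), "bridge": (10.0, 20.0)}
seen = {"fountain": (4.0, -5.0), "bridge": (4.0, 15.0), "kiosk": (-2.0, 3.0)}

print(localize(reference, seen))  # (6.0, 5.0)

growing_map = dict(reference)
slam_step(growing_map, seen)
print(growing_map["kiosk"])       # (4.0, 8.0)
```

The first function only reads the reference model, while the second also writes to it. That is the difference in a nutshell.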

Do we need GPS anymore?

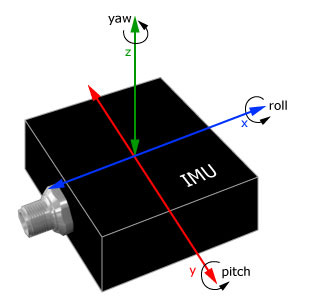

VPS does not eliminate the utility of GPS, not by a long shot. In brief, VPS is mainly useful when GPS is not available for getting a terrestrial location—longitude and latitude—and otherwise can provide the pose of a camera to know its orientation (just like compass angle) but also the tilt and roll. These are still spatial attributes of the observer, but beyond the scope of GPS.

Other sensors also have overlapping utility with VPS, such as an accelerometer or the related Inertial Measurement Unit (IMU), which typically combines accelerometers and gyroscopes, often with a magnetometer. These devices sense motion and orientation, and help for example in providing a compass angle on your mobile map app or determining camera orientation. An IMU paired with a LiDAR or camera sensor is helpful for indoor mapping.

There are certainly other relevant sensors with value for world building. Radio waves from radar can be helpful for underground mapping, and are also effective on satellites. Many examples exist of using Bluetooth beacons and Wi-Fi signals to get a position where GPS is not available. Alternative approaches like magnetic sensors and ultrasound achieve something very similar to world building and mapping, but at a micro level in medical contexts.

VPS is a constantly improving method based on decades of small advancements, but one of the most significant things is how a camera and an algorithm can now achieve something that previously relied on far more intricate devices (not to say a mobile phone is less than intricate). Niantic, Google and others all also cite how GPS is often used in conjunction with VPS to improve performance and results. It is not disrupting the need for these other sensors entirely, as much as enabling location services in more difficult environments.

VPS and new possibilities

Widespread use of VPS will be most important in consumer devices like the mobile phone. Currently, much of it is visible in the form of games and trinkets, such as Pokemon Go or Snapchat. Google has started indicating a more relevant day to day use with navigation, but it’s rare that users want to navigate with their phone in front of their face (yet we do need to constantly glance at it in a complex navigation environment).

Augmented Reality is the main use case that relies on VPS—any way of overlaying data on the world, whether meshes like painting a building purple or glints and points like dropping a golden coin in a park.

The practical uses require more imagination today, but surely will become reality soon. OpenStreetMap data, for example, is still largely based on 2D map locations, and it is very rare that OpenStreetMap has 3D models fully linked to it or visual scenes fully integrated.

Integrating VPS with OpenStreetMap, Google Maps, or other consumer map platforms means retrofitting many datasets to ensure a restaurant location, a building polygon, or a road are connected to their “digital twin” in a 3D model. VPS then is able to tell you what particular restaurant or street corner you are on, whether you are looking for navigation or doing construction.

Technologies like Unity and Google Earth start to merge 2D maps into the 3D world. But 3D data is more than just a raster tile or mesh: it can be countless objects that are entities within the scene. This is where OpenStreetMap and GIS vector data stand apart from much of the 3D technology that consists of scans of the world without labels and concrete divisions. Using VPS against a 3D model where objects have labels and properties is an even bigger possibility than simple positioning.

Data collection has the opportunity to improve due to VPS as well. When collecting OpenStreetMap data, you often have to rely on blurry satellite images or misaligned datasets, so placing a fire hydrant or a food stand on the map can come with a high tolerance for inaccuracy, with 1-2 meters being “close enough”. Using VPS to visually map the world, and scan specific objects, can mean getting very precise locations of map data, and also treating data not as points and lines and polygons, but instead as their true shape.

A picnic table located at 9.6866591, 46.7679656 is a point location, but that says nothing about how wide it is, how tall it is, or how much physical space it really occupies. Something like SLAM and VPS can be used to map, scan, and reference this table rather than abstract it to an x and y location on a plane. A mobile phone user can point a camera at it and instantly know they are at the agreed upon location for lunch today.
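
The gap between a point and a real object can be sketched with two small data structures. The class names and the table dimensions are invented for illustration, and I am assuming the coordinate pair is in longitude, latitude order.

```python
from dataclasses import dataclass


@dataclass
class PointFeature:
    """A classic 2D map point: a position and nothing else."""
    lon: float
    lat: float


@dataclass
class ScannedObject:
    """The same position, plus the extent a scan could capture (as a bounding box)."""
    lon: float
    lat: float
    length_m: float
    width_m: float
    height_m: float

    def footprint_m2(self):
        """Ground area covered by the bounding box."""
        return self.length_m * self.width_m

    def volume_m3(self):
        """Volume of the bounding box."""
        return self.footprint_m2() * self.height_m


table_point = PointFeature(lon=9.6866591, lat=46.7679656)

# Hypothetical dimensions; a real scan would produce a mesh rather than a box.
table_scan = ScannedObject(lon=9.6866591, lat=46.7679656, length_m=2.0, width_m=1.5, height_m=0.75)

print(table_scan.footprint_m2(), table_scan.volume_m3())  # 3.0 2.25
```

Even a crude bounding box carries information that the point cannot, and a true scan goes further still.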

To sum it up in one phrase, probably the biggest opportunity around VPS and its adjacent technologies is the realization of AR, but the most practical use for the mapping community is the ability to shatter past expectations of precision by continually improving the detail of a 3D map of the world.

Invisible Cities and Worlds

Let us conclude now with a quote from Italo Calvino’s Invisible Cities, as his protagonist describes Zaira, one of many cities in the novel (all referring truly to some aspect of Renaissance Venice). Zaira is akin to one of these worlds that VPS queries for location:

The city, however, does not tell its past, but contains it like the lines of a hand, written in the corners of the streets, the gratings of the windows, the banisters of the steps, the antennae of the lightning rods, the poles of the flags, every segment marked in turn with scratches, indentations, scrolls.