Street View: A History

How imagery visualizing the scenes along routes came to be

Today's post is sponsored by OpenCage, makers of a highly available, simple to use, worldwide geocoding API based on open datasets like OpenStreetMap. Test the API, see the docs or the pricing (hint: it's radically cheaper than Google).

Street view, street-level imagery, photospheres, and other names all describe a concept where images are geotagged, and often sequential, giving an immersive ground-level perspective along routes that can be driven, ridden, or walked, and sometimes even floated, skied, or climbed. Most people think of Google Street View when discussing this topic, since it launched in 2007 and evolved alongside Google Maps and many of the familiar user experiences centered on interacting with maps and navigation.

Street photography

The origins of street view go back much further, and what counts as the first is debatable. In the early days of film, short clips taken from a car or horse carriage were used to convey the atmosphere of a city or neighborhood; you can find them in history documentaries on YouTube today, like this one from Berlin in 1900. These are, of course, views from the street, but they were never turned into spatial data.

Photographing specific objects as portraits and for future reference also has a history almost as old as photography itself, for example through France's Commission des Monuments Historiques, but this documentation was neither panoramic nor exhaustive, and it did not capture images sequentially along a road.

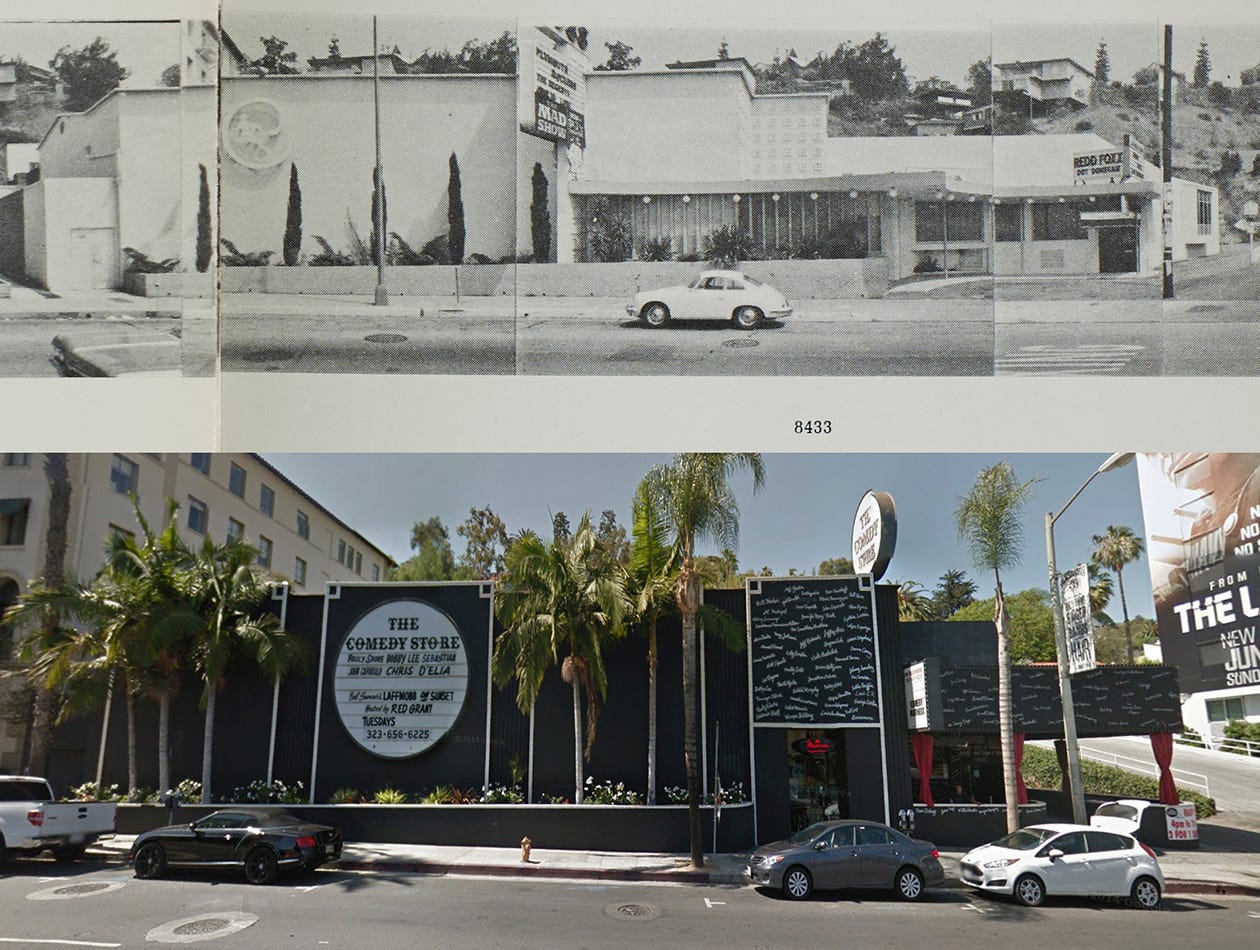

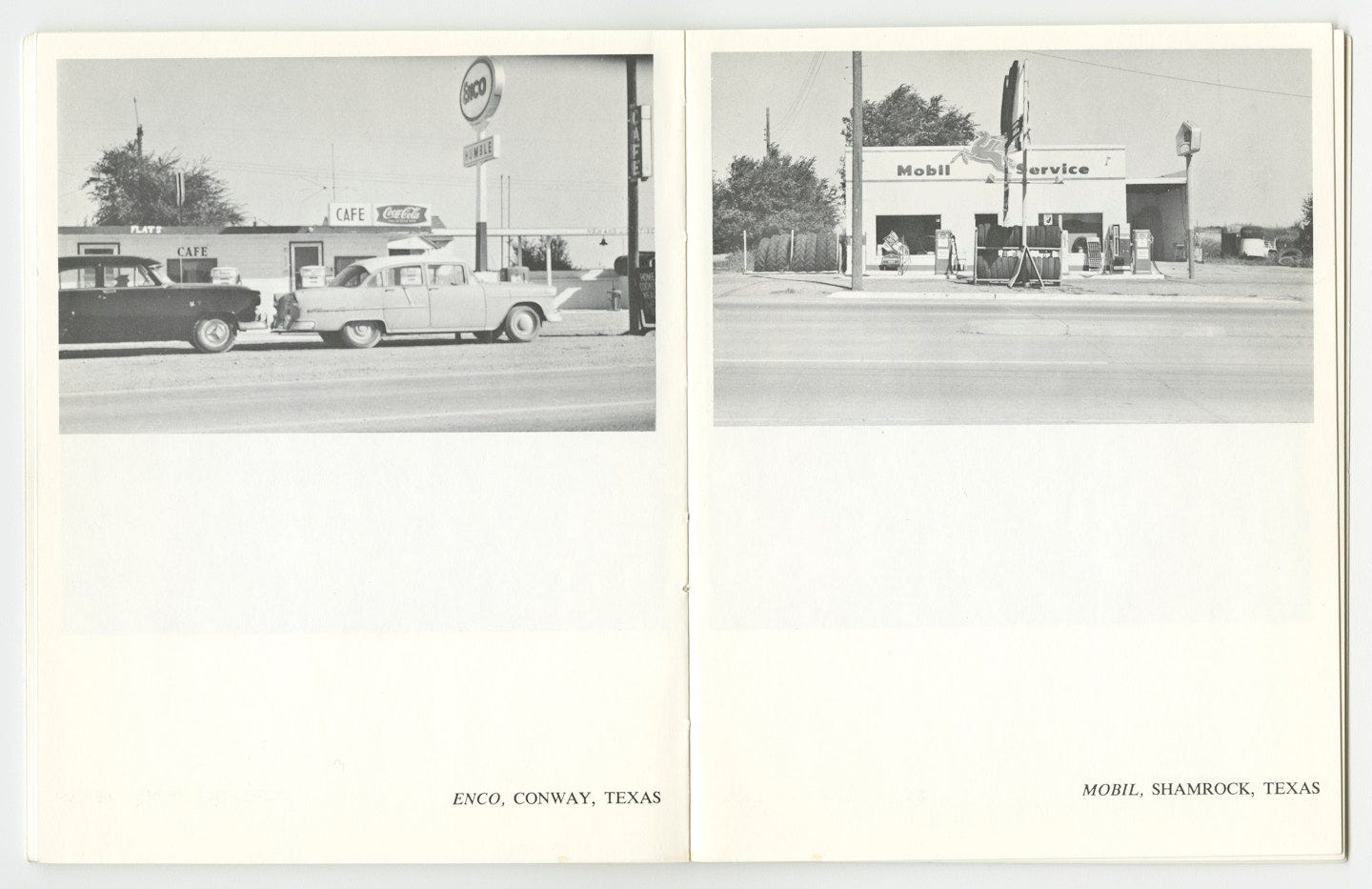

One of the original minds behind capturing the street to give the public a view of it is Ed Ruscha, the 87-year-old artist of many talents. While much of his work is in graphics and painting, one of his earlier publications, Twentysix Gasoline Stations, is a kind of index of modern industry that presents unglamorous photographs of exactly what the title describes. What is remarkable about it from a data collection point of view is that these are not famous landmarks, which photography often focuses on, but rather an arbitrary view of what is there. At the time, this also made for profound art to contemplate, at least for some.

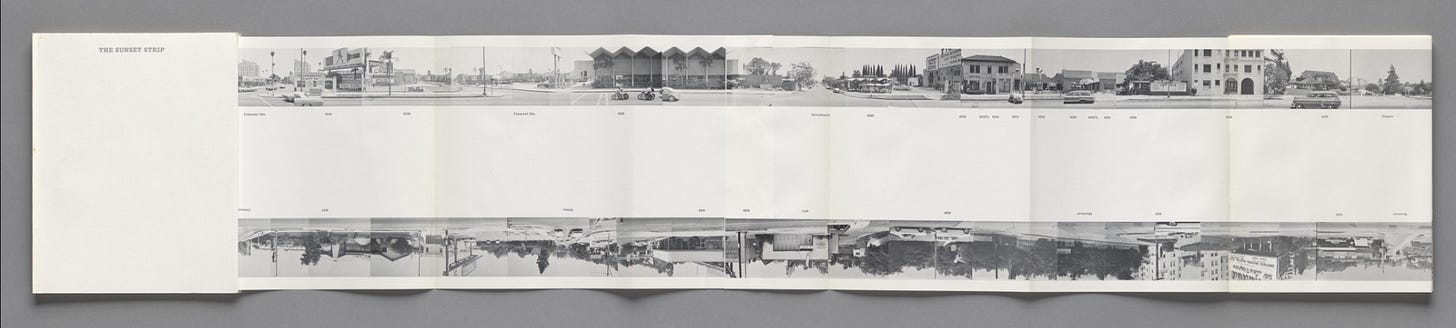

Ed Ruscha later made two more ambitious projects along these themes. He did what Google Street View and its relatives would later do: he captured photographs in sequence, aiming to provide a panoramic view of an entire street, focused on the left and right sides rather than straight ahead. His 1966 book Every Building on the Sunset Strip again delivered exactly what its title promised.

Ruscha went on to release other books such as Thirtyfour Parking Lots (1967), and he produced another panoramic street view publication focusing on Hollywood Boulevard. Ruscha's work can be found in photography museums around the world, and it is primarily in print rather than digital. His physical photographs had no geotags; nothing geographic was digital at all in Ruscha's heyday of street photography. In that sense, photographing the street was similar to capturing aerial imagery: the images needed stitching (which he did), and matching them to graphical maps and specific geographic coordinates was left to specialists.

So what happens next? How does this artistic approach to documenting urban structures go on to become a staple of map user experience?

The Aspen Movie Map

Ruscha's artistic approach to photography was succeeded, however unintentionally, by a later, more video-based approach that was highly experimental and had a military connection. In 1978, 16mm cameras mounted on a vehicle captured video frames in four directions in the mountain town of Aspen, Colorado. Funding came from the US Department of Defense's DARPA, with the aim of using the visual captures to build a model of a town for military mission planning.

The Aspen Movie Map was presented as an interactive tool for exploring the town. The user could move in any of the four directions (front/left/right/rear) and could even select buildings visible along the street to see additional interior photos. There was also an overlay map. It sounds oddly similar to what we still use today on the web!

Road surveys

About a decade after Ruscha's books were published, the camera was put to a more practical and mechanical use: documenting the condition of roads, with the lens facing forward. The New York State Department of Transportation started its Photolog Unit in 1975, using a 35mm “Right-Of-Way (ROW) camera” with a 3300×2400 pixel resolution. This camera was used on a five-year update cycle until 2003, when the department switched to digital cameras and a two-year cycle.

Other states followed suit. The new digital cameras were essentially dashcams, but with an overlay showing the milemarker or other linear referencing position where each image was taken, rather than a longitude and latitude.
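Linear referencing locates a point by its distance along a known route rather than by coordinates. As a rough illustration of how such a position could later be turned into a latitude and longitude, here is a minimal Python sketch, assuming the route geometry is available as an ordered list of (lat, lon) vertices starting at milepost 0. The helper names `haversine_miles` and `locate_along_route`, and the sample route, are hypothetical; this is not how any transportation department's photolog system actually worked.

```python
import math

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius; assumes a spherical Earth


def haversine_miles(a, b):
    """Great-circle distance in miles between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(h))


def locate_along_route(route, measure_miles):
    """Hypothetical helper: approximate (lat, lon) for a distance along a route.

    `route` is an ordered list of (lat, lon) vertices, with milepost 0 at route[0].
    Walks the segments until the one containing the measure, then interpolates
    linearly in lat/lon within that segment (a simplification for short segments).
    """
    if measure_miles < 0:
        raise ValueError("measure must be non-negative")
    travelled = 0.0
    for start, end in zip(route, route[1:]):
        seg = haversine_miles(start, end)
        # Skip zero-length segments; stop at the segment that reaches the measure.
        if seg > 0 and travelled + seg >= measure_miles:
            t = (measure_miles - travelled) / seg
            return (start[0] + t * (end[0] - start[0]),
                    start[1] + t * (end[1] - start[1]))
        travelled += seg
    raise ValueError("measure is beyond the end of the route")
```

For example, `locate_along_route(route, 12.5)` would return the interpolated position 12.5 miles from the start of `route`. Interpolating linearly in latitude and longitude within a segment is only adequate for short segments; a real system would more likely work in a projected coordinate system or use a GIS library's linear referencing functions.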

CityBlock

While many states were still using analog cameras to capture ROW imagery, a group of students at Stanford University was working on a project, similar in spirit to Ed Ruscha's photography, that approached the problem of visualizing urban landscapes more mathematically, aiming to minimize photographic distortion. This became the CityBlock project at the Stanford Computer Graphics Laboratory. Larry Page, one of Google's founders, challenged the team to turn a video he had shot while driving into a summarized set of images.

The project went on to capture imagery with a camera mounted on a vehicle, tailored to specific angles and specifications. This control over camera type and mounting (achieving consistent quality and pose, then relating multi-perspective images and correcting distortion) would be key to the future of Google Street View. The research was eventually folded into the Google Street View product in 2006.

The Swiss Connection

CityBlock was not the only seed of Google Street View. In Lucerne, Switzerland, a company called Endoxon, focused on regional map data collection, was an early pioneer of many of the geospatial products later employed by Google. While Endoxon did not have a street view product available for public use, it had used cameras in the 1990s to capture street-level imagery documenting places and details along roads, perhaps including addresses.

The Big Idea

The concept of documenting the street in images and video was long established. The Aspen Movie Map had provided an initial proof of concept, companies like Endoxon had moved to scale the technology for surveying the street panoramically, and US government agencies (and others in Japan and elsewhere) focused on pointing the camera forward to survey the road surface. Altogether these were low-profile applications, but Luc Vincent, the engineering director responsible for Google Street View in the early 2000s, cites Larry Page as the source of the original idea for Google's product.

Page's video for the CityBlock project had a motivation behind it: finding ways to make video taken from a vehicle useful to a large global audience, like the one Google had for its search engine and would soon have for Google Maps. After Google collected imagery in a few cities and launched it on Google Maps, an uptick in traffic driven by interest in the new feature confirmed that it was helping the map service gain popularity against competitors like Yahoo.

Scaling

Google Street View began to grow. From early on, Google had also been researching computer vision technology for face blurring, which is now applied widely to the imagery. Court cases in places like Austria limited the capture of imagery in public places, while in Switzerland a ruling required strict anonymization, limited camera height to 2 meters, and laid out other privacy-focused restrictions.

The rest is, for the most part, history. Google Street View kept growing, covering many countries, ski resorts, and the insides of museums; it started to accept some user contributions and contracted independent drivers around the world. Even today, the Google car makes frequent appearances on public streets worldwide.

The Competition

Various other street view services have existed, perhaps too many to document. Everyscape launched its product in the early 2000s and included Aspen, Colorado, home of the Aspen Movie Map, as one of its first locations. It later became the foundation of Microsoft Bing Maps' Streetside product, which still exists but is not updated frequently. Companies like TomTom and HERE Technologies have used vehicle fleets to collect imagery, while smaller firms like Cyclomedia or SiteTour360 do independent contract capture and hold certifications showing their imagery meets Google's standards.

Google's head start and deep investment in street view are difficult to compete with, however, and few have come close. Crowdsourcing arrived on the scene only after Street View had already reached peak fame: Mapillary appeared in 2014 and, by staying neutral to map platforms, integrated with OpenStreetMap tools, HERE's MapCreator, Esri GIS tools, QGIS, and various other platforms. Other crowdsourced alternatives also arrived, such as OpenStreetCam (now KartaView), which was at various points owned by Telenav and then Grab. Apple also launched Look Around to complement Apple Maps.

Data Access

Google restricted the use of Street View and its APIs, for example in Esri ArcGIS integrations, and its licensing did not allow the imagery to be used as a reference for editing OpenStreetMap, which created community demand for an alternative. Meanwhile, although Google Street View images were often used in research projects in computer vision and beyond, they were not openly accessible as a whole, unlike the Creative Commons-licensed imagery of the crowdsourced alternatives. This opened interesting opportunities as Mapillary grew to millions of images, and to about 2 billion today, spread across the world.

Google Street View started as a centralized project, with capture coordinated by the company just like other forms of map data surveying; it was later subcontracted, but it all remained a single umbrella effort. This is extremely effective when the budget is there, especially for making sure imagery exists exactly where the company wants it. Companies relying on crowdsourcing had less influence over where imagery was captured, instead leaving it to users, including local and national governments, to capture wherever they liked and then share the imagery openly. Europe proved a hotspot, along with Japan and various other corners of the world, and their dense coverage is still visible on the Mapillary website.

Alternatives

New alternatives also arose, like Panoramax, which likewise has European roots and is linked to the OpenStreetMap community, known for taking independent and communal approaches to data with a strong emphasis on open data and open source. Panoramax takes the opposite approach from Google: it is decentralized not just in community contribution, like Mapillary, but also in its open source server software, which enables federated hosting. The French government hosts imagery on its own server, the French OSM community hosts another, and communities across the globe can do the same, while all images remain browsable in a single viewer, such as within an OpenStreetMap editor.

Meanwhile, Hivemapper tried a different approach: why not incentivize users financially? Issuing a cryptocurrency token called honey, the company encouraged users to upload dashcam footage of their driving in exchange for compensation, which was expected to increase in value as the image collection grew and the data became valuable to other parties. More recently, Hivemapper merged into a broader product called BeeMaps.

Computer Vision and Advanced Applications

Google remains the original pioneer of street view as a new way for consumers to explore maps, but it was also early in using machine learning and computer vision to extract key information about the world. Recognizing addresses, business locations, building entrances, traffic signs, and intersections has been a large side benefit of analyzing street view imagery, and sometimes even its main added value. Mapillary, for its part, was able to extract the values and positions of traffic signs around the world, and other map providers benefited by updating speed limits, warning drivers about stop signs, and offering more accurate navigation that matches road signs and arrows.

Autonomous driving, including Google's own projects, which were also led by street view guru Luc Vincent, as well as projects using other imagery sources, became heavily dependent on computer vision and imagery as a data source: sometimes in combination with LiDAR, and sometimes as a complete alternative to it, as Tesla seeks to do.

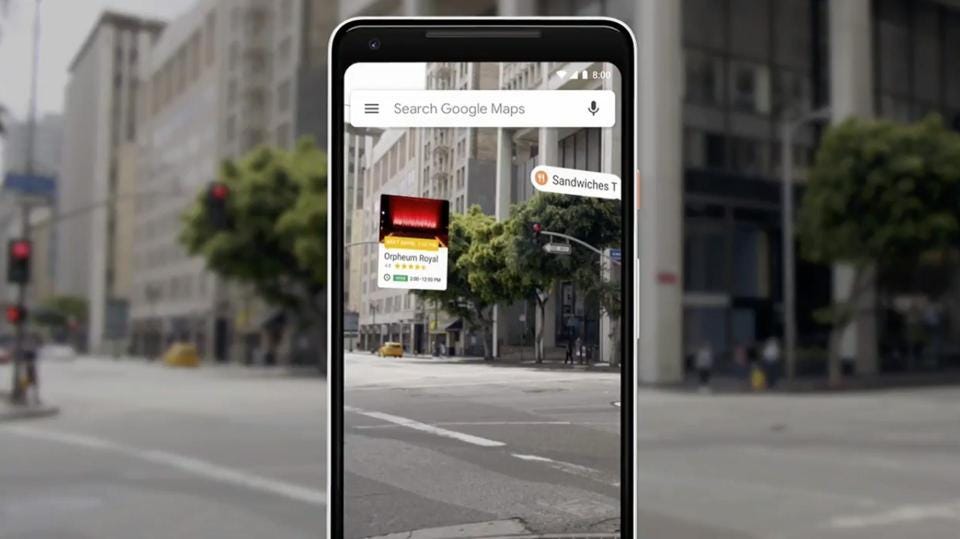

Google has also launched new features built on advanced research in spatial computing, including a blend of street view and aerial imagery in 3D maps, where a user can zoom in on a Google Earth-style view and be catapulted from the sky to the street and back again. In addition, Google's Live View prompts the user to point a mobile phone camera at the street and overlays arrows on the screen to aid navigation, relying on street imagery and models derived from it to estimate depth and match the camera view to the map.

After street view

Street view continues to grow, with new imagery on Google, Mapillary, and more every day. Maps never stop updating, because urban and natural landscapes also keep evolving over time, and new imagery from angles near and far helps index what is where.

Google Street View was an immersive experience from the start, and all technologies that capture the world visually from a human perspective (as opposed to a bird's-eye view, satellites, lasers, or other spectra) seem to be gravitating toward more detail and more realism. Google Street View has always been 2D, giving only an artificial sense of full immersion, but there is a clear push at Google and other companies toward something truly 3D, which even the Aspen Movie Map had started to explore alongside its photography. Using LiDAR to capture 3D imprints of the world, textured and draped with real images, is one approach, while spatial computing aims to give machines an awareness of physical space similar to how the human mind understands it.

While paid mapping of the world with vehicle-mounted cameras may become less sustainable, especially given its potentially diminishing value outside key areas, crowdsourcing is likely to grow because it serves a dual purpose: if someone is going that way anyway, it's nice to map the world along the way. Meanwhile, the most populated, visited, and developed areas may see even more detailed mapping with various devices, showcasing the latest advances in AI, computer vision, spatial computing, and more. Google is likely to remain one of the most important companies in this realm, especially with its massive audience of consumer map users.

The biggest question is how our experience of maps, especially immersive experiences, may change beyond the mobile phone and the computer—what will be the next computing device? It may not yet even be conceived, but the future holds promise.

The BBC Domesday Project of 1986 also included a number of virtual walks.

https://www.domesday86.com/?page_id=811