Synchronization of the Editable World

Maps reflect reality, but can they keep up with the unfolding of time?

Today's post is sponsored by OpenCage, makers of a highly available, simple to use, worldwide geocoding API based on open datasets like OpenStreetMap. Test the API, see the docs or the pricing (hint: it's radically cheaper than Google).

There was a time when fixing a map was akin to updating a book. Once published, the material could not be updated retroactively; it had to be replaced with a new version. With old paper maps, it is important to know the year they were printed. In the geospatial industry, this has changed rapidly over the past few decades: the map itself, when digital and cloud native, is commonly assumed to be always current. The data sources, however, are what carry a publication date.

Sensing all the time

Sensors, in the realm of the “internet of things” (IoT), are among the most cutting-edge sources of advanced geospatial data. Some are incredibly simple: a thermometer or weather station, or even a Raspberry Pi sensing air quality. Simpler still, in the visual spectrum, is a webcam. The powerful thing about these sensors is that they stream continuously rather than reporting at ad hoc intervals.

Satellites can approach streaming too; some even capture video. Some weather satellites, while not using video, are geostationary and monitor the same area continuously. Beyond satellites, there is crowdsourced data, as in Waze and Google Maps, which can provide a streaming feed of foot traffic in a shopping center, automobile traffic on highways, or public transit status.

In many other cases, geospatial data is a snapshot in time and space. Systems like STAC (SpatioTemporal Asset Catalog) help to navigate this, while many individual data sources, such as Mapillary street-level imagery, take care to record the capture and upload time alongside the location of each image.
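To make the "snapshot in time and space" idea concrete, here is a minimal sketch of filtering catalog entries by place and capture time. It assumes plain Python dicts shaped loosely like STAC Items (an `id`, a `bbox` of `[min_lon, min_lat, max_lon, max_lat]`, and a `properties.datetime` string). The item IDs and coordinates are made up, and a real workflow would more likely query a STAC API through a client library than loop over dicts.

```python
from datetime import datetime, timezone


def bbox_intersects(a, b):
    """True if two [min_lon, min_lat, max_lon, max_lat] boxes overlap."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


def parse_datetime(value):
    # STAC datetimes are RFC 3339 strings; swapping the "Z" suffix for
    # "+00:00" lets datetime.fromisoformat parse them on older Pythons too.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def search(items, bbox, start, end):
    """IDs of items overlapping `bbox` and captured within [start, end]."""
    hits = []
    for item in items:
        value = item["properties"].get("datetime")
        if value is None:
            continue  # items described only by a time range are skipped here
        captured = parse_datetime(value)
        if bbox_intersects(item["bbox"], bbox) and start <= captured <= end:
            hits.append(item["id"])
    return hits


# Made-up items: two over Berlin (different years), one over Paris.
SAMPLE_ITEMS = [
    {"id": "scene-a", "bbox": [13.3, 52.4, 13.5, 52.6],
     "properties": {"datetime": "2023-05-01T10:00:00Z"}},
    {"id": "scene-b", "bbox": [13.3, 52.4, 13.5, 52.6],
     "properties": {"datetime": "2021-05-01T10:00:00Z"}},
    {"id": "scene-c", "bbox": [2.2, 48.8, 2.4, 48.9],
     "properties": {"datetime": "2023-06-01T10:00:00Z"}},
]
print(search(SAMPLE_ITEMS, [13.0, 52.0, 14.0, 53.0],
             datetime(2023, 1, 1, tzinfo=timezone.utc),
             datetime(2023, 12, 31, tzinfo=timezone.utc)))
```

Only the 2023 Berlin scene passes both the spatial and the temporal filter. The 2021 scene covers the right place at the wrong time, and the Paris scene covers the right time in the wrong place.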

The Dynamic Revolution

All of this may seem normal if you work regularly with geospatial data; you will nod along, because you understand how it works. In terms of cartography and user experience, however, this is a revolutionary phenomenon that quietly arrived on the scene as part of the much louder arrival of worldwide internet connectivity, big data, and the static-to-dynamic shift of Web 2.0.

There is still a catch, or let’s say, a cache. When you use a mobile app, for example, the map tiles are often fetched then stored temporarily, for a few minutes, hours, or days. You may need to manually force a refresh, or you may find it is too data intensive—burning away your mobile data or causing constant lag—to fetch and re-fetch the map data if it is constantly morphing. In many cases this is no issue, and the map data really does update by the minute.
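The caching trade-off can be sketched as a tile cache with a fixed time-to-live. A tile is reused until it is older than the TTL and then fetched again, and a manual refresh bypasses the cache entirely. This is a minimal sketch: `TileCache`, the fake fetch function, and the one-hour TTL are hypothetical. Real map clients often lean on HTTP caching headers rather than a hand-rolled class like this one.

```python
import time


class TileCache:
    """Reuse fetched map tiles until they are older than a time-to-live (TTL)."""

    def __init__(self, fetch, ttl_seconds, clock=time.monotonic):
        self.fetch = fetch            # callable (z, x, y) -> tile payload
        self.ttl = ttl_seconds
        self.clock = clock            # injectable so the demo can fake time
        self._store = {}              # (z, x, y) -> (tile, fetched_at)
        self.fetch_count = 0          # how many network fetches happened

    def get(self, z, x, y, force_refresh=False):
        key = (z, x, y)
        now = self.clock()
        cached = self._store.get(key)
        if cached is not None and not force_refresh and now - cached[1] < self.ttl:
            return cached[0]          # still fresh: no data usage
        tile = self.fetch(z, x, y)    # missing, expired, or forced: refetch
        self.fetch_count += 1
        self._store[key] = (tile, now)
        return tile


# Demo with a fake clock (seconds) instead of real time.
fake_now = [0.0]
cache = TileCache(lambda z, x, y: f"{z}/{x}/{y}@{fake_now[0]}",
                  ttl_seconds=3600, clock=lambda: fake_now[0])
cache.get(12, 2200, 1343)                      # first request: fetched
fake_now[0] = 600.0
cache.get(12, 2200, 1343)                      # 10 minutes later: from cache
fake_now[0] = 4000.0
cache.get(12, 2200, 1343)                      # past the TTL: fetched again
cache.get(12, 2200, 1343, force_refresh=True)  # manual refresh: fetched
print("fetches:", cache.fetch_count)
```

A shorter TTL keeps the map closer to the live data, but it costs more fetches and more mobile data. That is the trade-off described above.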

Infinite refresh

OpenStreetMap data is a great example of this. It is very difficult to find a map, meaning a cartographic design, that updates more often than every two weeks. The official OpenStreetMap Carto style, deployed as raster tiles on the OSM tile server, does update amazingly fast. The Overpass API, a read-only query service over OSM data, also reflects changes almost immediately, although this is data and not a map. Meanwhile, products like the Daylight Distribution, which packages OSM data minus some errors and messiness and has since migrated into Overture Maps, release every two weeks at best. This affects many data providers, whose map data also lags 2 to 4 weeks behind.

OSM is actually remarkable in being one of the fastest-updating map sources around. It prioritizes rapid deployment even when that means mistakes get deployed and must be reverted, rather than caught early and blocked.

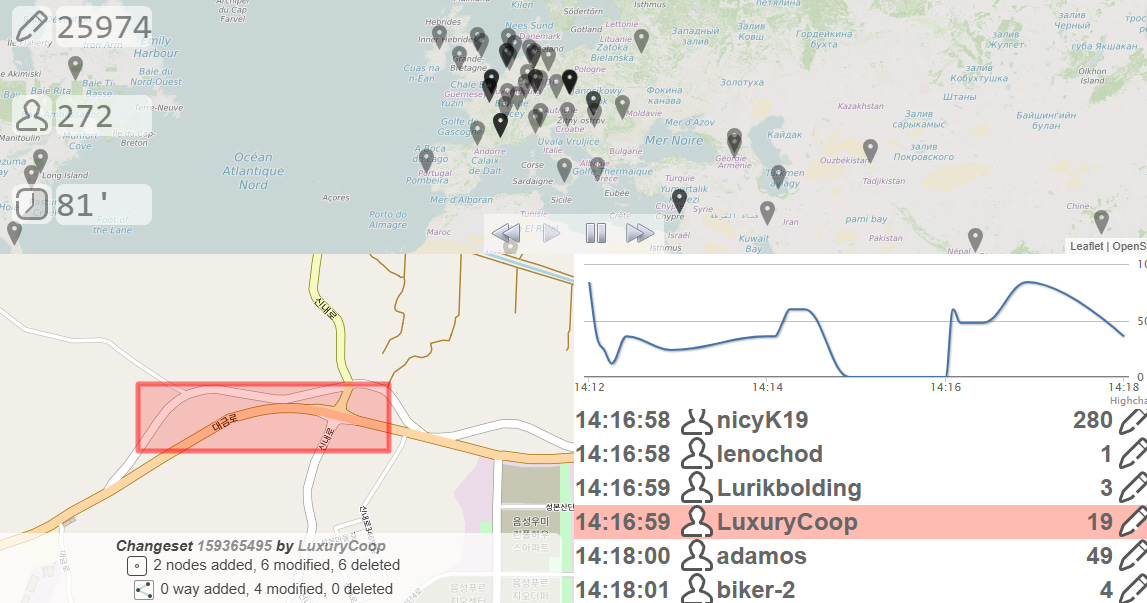

OSM refreshes in an effectively streaming way, but this is only because the edits coming into OpenStreetMap are also streaming. There is even a live map of where edits are being submitted. The data is there, but most visualizations of the data still only redeploy at lagging intervals—today, you will see last month’s world.
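That stream of edits is published as replication diffs. OpenStreetMap makes minutely osmChange files available under https://planet.openstreetmap.org/replication/minute/. Each file is numbered by a sequence number that is zero-padded to nine digits and split into three directory levels, and a `state.txt` file at the top level names the latest sequence. The sketch below only builds those paths and does no downloading. The sequence number in the example is arbitrary, and dedicated replication tools handle the actual fetching and applying of diffs.

```python
MINUTE_BASE = "https://planet.openstreetmap.org/replication/minute/"


def replication_path(sequence, suffix=".osc.gz"):
    """Turn a replication sequence number into its AAA/BBB/CCC file path.

    The number is zero-padded to nine digits, then split into three groups
    of three: the first two become directories, the last the file name.
    """
    digits = f"{sequence:09d}"
    return f"{digits[0:3]}/{digits[3:6]}/{digits[6:9]}{suffix}"


def replication_urls(sequence):
    """URLs of the change file and its matching state file for one minute."""
    return (MINUTE_BASE + replication_path(sequence, ".osc.gz"),
            MINUTE_BASE + replication_path(sequence, ".state.txt"))


print(replication_urls(5678901))
```

A consumer that polls `state.txt`, walks forward through the sequence numbers, and applies each diff keeps its own copy of the data within minutes of the edits. Whether the rendered map follows just as closely depends on everything downstream.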

Why the deployment lag?

The two-week lag in map deployment has advantages. It gives time to catch and fix errors before they reach production, where they could have widespread impacts on people’s daily lives or business operations. It also saves money, depending on how complex it is to turn map data into visual tiles: compiling and shipping a global map every minute, particularly as vector data, certainly costs more than doing it twice a month. How many users really need all the data (as opposed to none of it, or only a small portion) updated in real time? Probably very few.
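The cost side and the freshness side of that trade-off can be put into rough numbers. Assume releases arrive on a fixed schedule and a user opens the map at a random moment. The data they see is then, on average, half a release interval old plus any processing lag, and at worst a full interval plus the lag. The function below is a back-of-the-envelope sketch under those assumptions, not a cost model of any real provider.

```python
def release_tradeoff(interval_days, processing_lag_days=0.0, period_days=30.0):
    """Builds per period and data age for a fixed release cadence.

    Assumes a release every `interval_days`, each built from data frozen
    `processing_lag_days` before publication, and a user who looks at the
    map at a uniformly random moment.
    """
    builds = period_days / interval_days
    mean_age_days = processing_lag_days + interval_days / 2
    worst_age_days = processing_lag_days + interval_days
    return builds, mean_age_days, worst_age_days


ONE_MINUTE = 1 / (24 * 60)  # one minute expressed in days
for label, interval in [("every minute", ONE_MINUTE), ("twice a month", 15.0)]:
    builds, mean_age, worst_age = release_tradeoff(interval)
    print(f"{label}: {builds:,.0f} builds per 30 days, "
          f"mean age {mean_age:.4f} d, worst {worst_age:.4f} d")
```

Going from twice a month to every minute multiplies the number of builds by more than 20,000 (43,200 versus 2). In exchange, the average age of the data drops from about a week to under a minute.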

Going more and more cloud native has, however, allowed maps and their sometimes enormous, complexly structured data to become more dynamic in every way. Protomaps, for example, can render a map directly from a single cloud-hosted archive (in its PMTiles format) or another hosted data source, with no dedicated tile server in between. The map can be as current as that hosted file, although the data behind it still tends to be updated twice a month at best.

The lag might go away, but even with technical solutions, there is still this fundamental seed of doubt in just how reliable a streaming map of the world is without checks and controls.

The editable world of yesterday

A global map database is the accumulation of all human spatial knowledge. Friedrich Hayek wrote in his work “The Use of Knowledge in Society” about how no one person has perfect information about the economy, in terms of forecasting needs of the market or predicting what will happen. Everyone holds a fragment, and by the time someone—a financial analyst let’s say—is able to gather all the distributed viewpoints, they are already outdated.

This is the same with geospatial data. With a small dataset it is less true, but the larger the dataset, the faster the risk grows that parts of it are outdated. If one part is outdated, it is not enough to assume that only that fragment has an issue and the other 99% is fine. That fragment can have ripple effects, because everything is connected, especially nearby things.
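A simple probability model shows why size matters. Suppose each of n features is independently outdated with some small probability p. This is a simplification, since, as noted above, nearby features tend to go stale together. Under that assumption, the chance that at least one feature is outdated is 1 - (1 - p)^n, which climbs toward certainty as n grows. The value p = 0.001 below is illustrative, not a measured rate.

```python
def prob_any_outdated(n_features, p_outdated):
    """Chance that at least one of n features is outdated.

    Assumes each feature is outdated independently with probability
    p_outdated; real staleness is correlated, so treat this as a rough guide.
    """
    return 1 - (1 - p_outdated) ** n_features


def expected_outdated(n_features, p_outdated):
    """Expected number of outdated features under the same assumption."""
    return n_features * p_outdated


for n in (10, 1_000, 1_000_000):
    print(f"n={n:>9,}: P(any outdated)={prob_any_outdated(n, 0.001):.4f}, "
          f"expected outdated={expected_outdated(n, 0.001):,.0f}")
```

At the same per-feature rate, a ten-feature dataset is almost certainly clean, while a million-feature dataset almost certainly is not. Roughly a thousand of its features are expected to be outdated.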

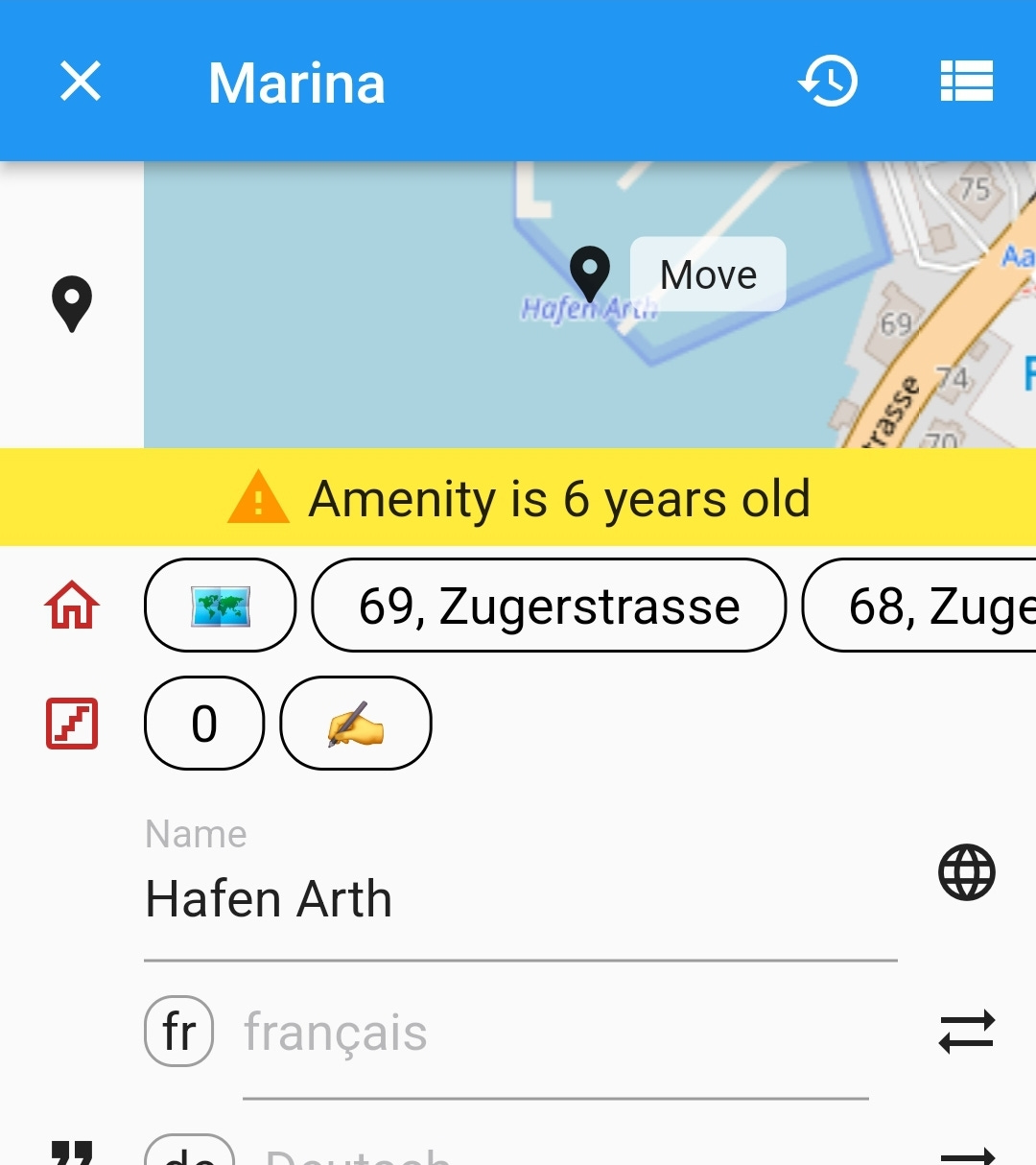

The world itself is editable. It changes naturally, like weather patterns or meandering rivers, but humans also modify it. This is true not only for the location of things, but also for their categories (a hospital can become a museum), attributes (the name or opening hours change), shape and size (expanding a building or re-routing a road), or less tangible qualities (flood risk goes up, a valuation goes down). When humans edit the physical world and its abstract attributes, a map edit is pending. Sometimes nobody knows this edit is pending until it is discovered years later.
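One way to picture a pending map edit is as a diff between how a feature is mapped and how it is now. The sketch below compares two versions of OpenStreetMap-style key=value tags and sorts the differences into added, removed, and modified keys. The feature and its values are hypothetical. Geometry changes, such as an expanded building or a re-routed road, would need a separate comparison.

```python
def diff_tags(before, after):
    """Classify tag differences between two versions of one feature."""
    added = {k: after[k] for k in after.keys() - before.keys()}
    removed = {k: before[k] for k in before.keys() - after.keys()}
    modified = {
        k: (before[k], after[k])
        for k in before.keys() & after.keys()
        if before[k] != after[k]
    }
    return {"added": added, "removed": removed, "modified": modified}


# Hypothetical feature: a hospital that has become a museum.
as_mapped = {"amenity": "hospital", "name": "St. Mary's", "opening_hours": "24/7"}
as_observed = {
    "tourism": "museum",
    "name": "St. Mary's Museum",
    "opening_hours": "Tu-Su 10:00-17:00",
}
for kind, changes in diff_tags(as_mapped, as_observed).items():
    print(kind, changes)
```

The category change appears as one tag removed and another added, while the name and opening hours appear as modifications. Each of these is a separate pending edit.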

In some cases this could be solved with sensors. There are fixed sensors and mobile sensors. A fixed sensor like a webcam can be analyzed rather easily to update a map, for instance to indicate when a blue building is painted red or a new crosswalk appears on the street. A mobile sensor like a satellite, with either a bird’s-eye or an oblique view, might find much of the same data. Another mobile sensor, like a street view camera, passes through at random times (not regularly, unless it is mounted on the weekly garbage truck or the daily postal delivery) and could see many things not visible from a fixed webcam or a distant orbiting camera.
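For the fixed webcam, the simplest possible check compares the average color of one region, say a building facade, between two frames and flags a large difference. This is a deliberately naive sketch. Frames are plain lists of RGB tuples and the threshold is arbitrary. Real footage would need to cope with lighting, shadows, weather, and camera movement before a detected change could be trusted.

```python
def region_mean(frame, box):
    """Average (r, g, b) over a rectangular region of a frame.

    `frame` is a list of rows of (r, g, b) tuples; `box` is (x0, y0, x1, y1),
    with x1 and y1 exclusive.
    """
    x0, y0, x1, y1 = box
    pixels = [frame[y][x] for y in range(y0, y1) for x in range(x0, x1)]
    return tuple(sum(p[c] for p in pixels) / len(pixels) for c in range(3))


def region_changed(before, after, box, threshold=60.0):
    """Flag a change when the region's mean color moves further than
    `threshold` (Euclidean distance in RGB space)."""
    a, b = region_mean(before, box), region_mean(after, box)
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) ** 0.5 > threshold


blue, red = (30, 60, 200), (200, 40, 40)
before = [[blue] * 4 for _ in range(4)]   # 4x4 frame: a blue facade
after = [[red] * 4 for _ in range(4)]     # same facade, repainted red
print(region_changed(before, after, (0, 0, 4, 4)))
print(region_changed(before, before, (0, 0, 4, 4)))
```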

Intelligent edits

These sensors are not intelligent, however. Post-processing, such as segmenting and labeling images, monocular depth estimation, or Structure from Motion, can extract more information than the raw data alone provides. Using AI to analyze sensor data in depth can support more ambitious edits, like guessing that a business has closed because the webcam has not seen its door open in 8 weeks. Guesses and predictions have a probability attached to them, and it is almost never 100%.
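The closed-business guess can be written as a small Bayesian update, which also shows why such guesses stay below 100%. Assume a prior probability that the business has closed. Assume also that if it is still open, the camera catches the door open in any given week with some fixed probability. Weeks without a sighting then shift belief toward "closed" without ever reaching certainty. The prior of 5% and the weekly sighting rate of 50% are illustrative assumptions, not figures from any real system.

```python
def prob_closed(weeks_unseen, prior_closed=0.05, p_seen_per_week=0.5):
    """Posterior probability that a business has closed, given that a fixed
    camera has not seen its door open for `weeks_unseen` weeks.

    Assumes: if the business is open, the door is caught open in any given
    week with probability `p_seen_per_week`, independently of other weeks;
    if it is closed, the door is never seen open.
    """
    miss_if_open = (1 - p_seen_per_week) ** weeks_unseen
    open_and_missed = (1 - prior_closed) * miss_if_open
    return prior_closed / (prior_closed + open_and_missed)


for weeks in (1, 4, 8):
    print(weeks, round(prob_closed(weeks), 3))
```

Under these assumptions, eight quiet weeks raise the estimate from 5% to about 93%. A more attentive camera raises it further, but the result approaches 1 without ever equaling it.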

If we could invent a magical sensor, let’s call it an AI guardian, we could attach it to any place or thing on the planet. Think of it as a silent drone equipped with LiDAR, a camera, and a small processing brain. Something like quantum entanglement could emerge here: the guardian would notice anything and everything that changes about the scene or object, and immediately sync this reality to the indexed database of reality (the map).

This would be a decentralized and distributed monitoring system—or surveillance even—and we might say omnipresent and omniscient, almost mythical and godlike.

A Sisyphean community

Perhaps this globally ubiquitous monitoring system is what a crowdsourcing community is, including users who are casual, who tie it to their business, who come from academia, or who use it in their everyday routines. The OpenStreetMap community is quite skilled at keeping select parts of the planet up to date, though almost never in perfect detail. In the rest of the world, an active mapper shows up only rarely, perhaps making thorough edits once every few months or years.

No one can expect a community to be a mythical aggregate hero who always edits the map to reflect reality without fail. It is a task for Sisyphus, the Greek king said to have been sentenced by the gods to roll an immense stone up a steep hill forever, only to watch it roll back down and start again. Longtime contributors to a map project may know this feeling well, as may those who work professionally on keeping a world model up to date.

Synchronizing on the go

People who edit OpenStreetMap are active editors of the map; there is no passive method for making changes directly. Other map providers may indeed have more passive ways of updating their maps. However, passivity has its limits. “Self-healing maps” was a popular term a few years ago, but the idea has not materialized, because no system, program, algorithm, or AI agent can be truly authoritative in shipping changes to a map. The end of the rope is always what the end user, a human, sees when comparing the map to reality.

Comparing maps to reality happens literally at a glance, when someone looks at their phone for a moment or more, often when doing navigation or search. This is often where inconsistencies are discovered, and only some of the time—when it is convenient or there is sufficient motivation—does the person update the map. Most inconsistencies are probably unreported, only noticed.

A future world may offer something much like Waze for drivers, but oriented toward people who want to map the world on foot or by bicycle. Imagine wearing the legendary AI glasses we all keep hearing about, and having the world around you painted with warnings and prompts wherever it mismatches the map. This building is 40 meters tall, but OSM has no height information: do you want to push an update? The map says there is a park here, but the various benches in your view are not mapped: do you want to confirm their location? You just entered this building: mark a node on OSM for the entrance?

It can, however, become overwhelming. It might be fun for an hour or two. It is something like StreetComplete, but instead of appearing on the phone, the questions and prompts are overlaid on the space around you. The world can get quite loud when so little of it is mapped in detail, and once again our augmented-reality mapper becomes Sisyphus rolling the geospatial stone.

In another version of this, the world is editable, but only when you want, just as most OpenStreetMap editing works today. Rather than being inundated with every broken synchronization between reality and the data world, you might occasionally switch it on, or query whether the place you are standing or the thing you are looking at exists in the map, and then take action. This is smaller in scale, even craft-mapping, but it is human scale as well.
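The on-demand version boils down to a proximity query: given where you are standing, is anything already mapped within a few meters? Below is a minimal sketch that uses the haversine formula on a spherical Earth. The feature list and the 25 m radius are hypothetical. Against live OpenStreetMap data, the same question could be asked with an Overpass `around` filter, for example `node(around:25,52.52,13.4051);out;`.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def mapped_nearby(lat, lon, features, radius_m=25.0):
    """(distance_m, name) for each mapped feature within radius_m, nearest first."""
    hits = []
    for f in features:
        d = haversine_m(lat, lon, f["lat"], f["lon"])
        if d <= radius_m:
            hits.append((round(d, 1), f.get("name", "(unnamed)")))
    return sorted(hits)


# Hypothetical mapped features near where you are standing.
FEATURES = [
    {"name": "Bench", "lat": 52.52000, "lon": 13.40500},
    {"name": "Cafe", "lat": 52.52100, "lon": 13.40500},
]
print(mapped_nearby(52.52000, 13.40510, FEATURES))
```

An empty result is a cue to map something. A non-empty result lets you check whether what is mapped matches what you see.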

Too big world

The world is much bigger than the community of mappers. On a macroscopic to microscopic scale, it is also far too detailed for humans to really map once, much less once per day. So much of it needs to rely on an acceptance of blank spaces—even just a few square meters here and there—and interpolation that assumes any region of the map is some variation or transition of what is nearby.
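Interpolation of this kind takes many forms. The text names no specific method, so inverse distance weighting (IDW), one of the simplest, is used here purely as an illustration. Each unknown value is estimated as a weighted average of known values nearby, with weights that fall off with distance. The sketch uses planar coordinates, and the sample building heights are hypothetical.

```python
def idw(x, y, samples, power=2.0):
    """Inverse distance weighted estimate of a value at (x, y).

    `samples` is a list of (xi, yi, value) in planar coordinates (e.g. meters).
    Each sample is weighted by 1 / distance**power, so nearer samples count more.
    """
    num = den = 0.0
    for xi, yi, value in samples:
        d2 = (x - xi) ** 2 + (y - yi) ** 2
        if d2 == 0:
            return value                  # exactly on a sample: no guessing needed
        w = 1.0 / d2 ** (power / 2)       # d2**(power/2) == distance**power
        num += w * value
        den += w
    return num / den


# Hypothetical mapped building heights (meters) at known positions.
HEIGHTS = [(0.0, 0.0, 10.0), (10.0, 0.0, 20.0), (0.0, 10.0, 12.0)]
print(round(idw(2.0, 2.0, HEIGHTS), 2))   # an unmapped building among them
```

The estimate is always a blend of what is nearby, so it can never produce a value outside the range of the known samples. That is exactly the "variation or transition of what is nearby" assumption, along with its blind spots.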

A perfectly streaming, constantly edited and updated map may be out of reach: an asymptote that can be approached but never touched. The curve may keep bending toward a map that is updated more quickly and in more detail, but cutting errors in half every year still means never reaching the end.
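The halving argument can be written out. Let E_n be the number of outstanding errors after n years, starting from E_0 > 0. Halving alone gives a sequence that shrinks toward zero with no finite year at which it is exactly zero, at least in this continuous idealization. Now suppose the world also keeps changing and adds roughly c new errors per year, an assumption introduced here for illustration. The same yearly halving then settles at a floor of 2c rather than zero:

```latex
E_n = E_0 \cdot 2^{-n} > 0 \quad \text{for every finite } n,
\qquad \lim_{n \to \infty} E_n = 0

E_{n+1} = \tfrac{1}{2} E_n + c
\;\Longrightarrow\;
E_n = 2c + (E_0 - 2c)\, 2^{-n} \;\longrightarrow\; 2c \quad (n \to \infty)
```

Substituting the closed form into the recurrence confirms it: 2c + (E_0 - 2c)2^{-n} halved, plus c, gives 2c + (E_0 - 2c)2^{-(n+1)}. Even steady progress against a changing world therefore levels off instead of finishing.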

Plato’s theory of Forms (Platonic realism) may be the only way to truly make peace with this vision of geospatial data. Our human brains and eyes, with their imperfect and fragmented monitoring of our immediate environments, fail to see the world as data and fail to translate it into a true representation of reality. Perhaps the map we make by crowdsourcing, measuring, calculating, and modeling is the most objective index of the earth: a world rendered in data that has also been validated. The world we sense around us is changeable, not always measurable, and subjective.

Mapping into existence

Like the fleeting thoughts we have and never write down, things unmapped, edits not yet made to the model of the earth, may not really exist in the geospatial record of the past, the map that others will reference, until we capture and map them. Without data in the database, scientific studies have no sources, AI has no training data, and maps have blank spaces, showing only emptiness to those who peruse them in the future.

Mapping the world, perhaps, is the only way to prove it exists.